Nvidia Driver Azure

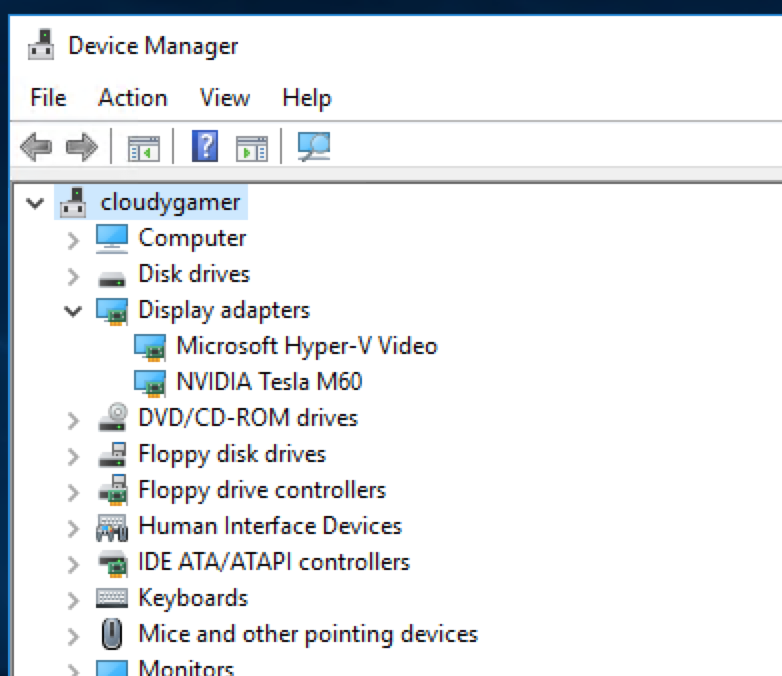


I've tried to install CUDA on three different VMs but have been unsuccessful in getting it to recognize my GPU.

I am using an Azure VM (Standard NV6) with an M60 GPU.

With a fresh VM I run the following commands taken from this guide:

It appears to run successfully and doesn't report any problems. But when I run

I receive the following:

I have tried with 16.04 LTS and various other GPU instances. Searching online shows others are using these Azure GPU instances with TensorFlow, so it doesn't appear to be an issue with the graphics card.


Finally, I have reviewed what seems to be the canonical guide to installing CUDA on Ubuntu, but it fails when running

The message in the log file:

My Question

What is the most reliable method for installing CUDA 8 on Ubuntu 14.04 LTS?


Are there any special precautions that I need to take when running CUDA on a VM?

Edit: Additional Info

uname -a returns

lsmod returns

1 Answer

The official Azure documentation points out:

Currently, Linux GPU support is only available on Azure NC VMs running Ubuntu Server 16.04 LTS.

I'm not sure why they even let you create GPU instances with 14.04 installed, but hopefully this will help spread the word.
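If you want to check a VM against that documented constraint before installing anything, here is a minimal Python sketch. It assumes the VM size is written in Azure's `Standard_NV6` form and reads the OS from the `ID` and `VERSION_ID` fields of `/etc/os-release`. The helper names are hypothetical, and the rule encodes only the sentence quoted above, so it will go stale as Azure's support matrix changes.

```python
import re
from pathlib import Path

def parse_os_release(text):
    """Parse KEY=value lines from /etc/os-release into a dict (surrounding quotes stripped)."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info

def matches_quoted_support(vm_size, os_info):
    """True only for NC-series sizes on Ubuntu 16.04, per the quoted documentation."""
    # Size names look like "Standard_NC6"; the series is the letters before the first digit.
    m = re.match(r"Standard_([A-Z]+)\d", vm_size)
    series = m.group(1) if m else ""
    return (
        series == "NC"
        and os_info.get("ID") == "ubuntu"
        and os_info.get("VERSION_ID") == "16.04"
    )

if __name__ == "__main__":
    path = Path("/etc/os-release")
    if path.exists():
        info = parse_os_release(path.read_text())
        # Hypothetical size; an NV6 does not match the quoted NC-only rule.
        print(info.get("PRETTY_NAME"), matches_quoted_support("Standard_NV6", info))
```

Under this rule, the asker's NV6 would not qualify even on 16.04. That is worth knowing before debugging the driver itself.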

After creating a fresh 16.04 instance I did the following:

First, I had to uninstall/blacklist the Nouveau drivers that come pre-installed on Ubuntu 16.04. They're not compatible with the NVIDIA drivers we're trying to install and will cause errors later on if we don't remove them.

At the bottom of the file add the following entries:

Reboot the VM with sudo reboot.
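After the reboot, you can confirm that Nouveau is no longer loaded by reading `/proc/modules`, which is the file `lsmod` formats. Once the driver is installed, the same file shows whether the `nvidia` module is loaded. The following is a minimal Python sketch, and the function names are illustrative.

```python
from pathlib import Path

def loaded_modules(proc_modules_text):
    """Return the set of loaded kernel module names (first field of each /proc/modules line)."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}

def gpu_driver_status(modules):
    """Report whether the Nouveau and NVIDIA kernel modules are present."""
    return {
        "nouveau": "nouveau" in modules,
        "nvidia": "nvidia" in modules,
    }

if __name__ == "__main__":
    proc = Path("/proc/modules")
    if proc.exists():
        status = gpu_driver_status(loaded_modules(proc.read_text()))
        if status["nouveau"]:
            print("nouveau is still loaded; check the blacklist entries and reboot again")
        print(status)
```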


I downloaded the drivers directly from Microsoft, but you can substitute with your preferred source:

I just clicked through the default selected options in the runfile.

Verify driver installation by running nvidia-smi
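For a scriptable version of that check, `nvidia-smi` can print selected fields as CSV through its `--query-gpu` and `--format=csv,noheader` options. Below is a minimal Python sketch that runs the query if `nvidia-smi` is on PATH and parses each row. The field choice and helper name are my own, and the sample row in the test is illustrative rather than real output from this VM.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]

def parse_gpu_csv(text):
    """Turn nvidia-smi CSV rows into one dict per GPU."""
    gpus = []
    for row in text.strip().splitlines():
        name, driver, memory = [field.strip() for field in row.split(",")]
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus

if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; the driver may not be installed or not on PATH")
    else:
        result = subprocess.run(QUERY, capture_output=True, text=True)
        if result.returncode != 0:
            print("nvidia-smi failed:", result.stderr.strip())
        else:
            for gpu in parse_gpu_csv(result.stdout):
                print(gpu)
```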


Install CUDA Toolkit 8
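After the toolkit install, `nvcc --version` reports the installed release on a line such as "Cuda compilation tools, release 8.0, ...". Here is a minimal Python sketch that extracts that number. It assumes `nvcc` is either on PATH or in `/usr/local/cuda/bin`, which is a common runfile location but is an assumption here.

```python
import re
import shutil
import subprocess

def cuda_release(nvcc_version_text):
    """Extract the toolkit release (e.g. "8.0") from `nvcc --version` output, or None."""
    m = re.search(r"release (\d+\.\d+)", nvcc_version_text)
    return m.group(1) if m else None

if __name__ == "__main__":
    # Assumed fallback location; the CUDA bin directory is often not on PATH by default.
    nvcc = shutil.which("nvcc") or shutil.which("nvcc", path="/usr/local/cuda/bin")
    if nvcc is None:
        print("nvcc not found; is the CUDA bin directory on PATH?")
    else:
        out = subprocess.run([nvcc, "--version"], capture_output=True, text=True).stdout
        print("CUDA release:", cuda_release(out))
```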


Posted on March 20, 2019

With ever-increasing data volume and latency requirements, GPUs have become an indispensable tool for doing machine learning (ML) at scale. This week, we are excited to announce two integrations that Microsoft and NVIDIA have built together to unlock industry-leading GPU acceleration for more developers and data scientists.

- Azure Machine Learning service is the first major cloud ML service to integrate RAPIDS, an open source software library from NVIDIA that allows traditional machine learning practitioners to easily accelerate their pipelines with NVIDIA GPUs.

- ONNX Runtime has integrated the NVIDIA TensorRT acceleration library, enabling deep learning practitioners to achieve lightning-fast inferencing regardless of their choice of framework.

These integrations build on an already-rich infusion of NVIDIA GPU technology on Azure to speed up the entire ML pipeline.

“NVIDIA and Microsoft are committed to accelerating the end-to-end data science pipeline for developers and data scientists regardless of their choice of framework,” says Kari Briski, Senior Director of Product Management for Accelerated Computing Software at NVIDIA. “By integrating NVIDIA TensorRT with ONNX Runtime and RAPIDS with Azure Machine Learning service, we’ve made it easier for machine learning practitioners to leverage NVIDIA GPUs across their data science workflows.”

Azure Machine Learning service integration with NVIDIA RAPIDS

Azure Machine Learning service is the first major cloud ML service to integrate RAPIDS, providing up to 20x speedup for traditional machine learning pipelines. RAPIDS is a suite of libraries built on NVIDIA CUDA for doing GPU-accelerated machine learning, enabling faster data preparation and model training. RAPIDS dramatically accelerates common data science tasks by leveraging the power of NVIDIA GPUs.

Exposed on Azure Machine Learning service as a simple Jupyter Notebook, RAPIDS uses NVIDIA CUDA for high-performance GPU execution, exposing GPU parallelism and high memory bandwidth through a user-friendly Python interface. It includes a dataframe library called cuDF which will be familiar to Pandas users, as well as an ML library called cuML that provides GPU versions of all machine learning algorithms available in Scikit-learn. And with DASK, RAPIDS can take advantage of multi-node, multi-GPU configurations on Azure.
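Because cuDF follows the Pandas API for common operations, the same dataframe code can often run on either library. The minimal Python sketch below prefers `cudf` when it imports and otherwise falls back to `pandas`. The helper names and toy data are hypothetical. It covers only a simple `DataFrame` plus `groupby().mean()` case, and it does not show anything specific to Azure Machine Learning service.

```python
import importlib

def pick_dataframe_module(candidates=("cudf", "pandas")):
    """Return (name, module) for the first candidate that imports, else (None, None)."""
    for name in candidates:
        try:
            return name, importlib.import_module(name)
        except Exception:
            # Deliberately broad: a GPU library may fail to import for reasons other than
            # ImportError on a machine without a usable GPU (an assumption).
            continue
    return None, None

def mean_by_key(xdf):
    """The same Pandas-style calls, run against whichever module was picked."""
    df = xdf.DataFrame({"key": ["a", "b", "a"], "value": [1.0, 2.0, 3.0]})
    return df.groupby("key").mean()

if __name__ == "__main__":
    name, xdf = pick_dataframe_module()
    if xdf is None:
        print("neither cudf nor pandas is installed")
    else:
        print("using", name)
        print(mean_by_key(xdf))
```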

Learn more about RAPIDS on Azure Machine Learning service or attend the RAPIDS on Azure session at NVIDIA GTC.

ONNX Runtime integration with NVIDIA TensorRT in preview

We are excited to open source the preview of the NVIDIA TensorRT execution provider in ONNX Runtime. With this release, we are taking another step towards open and interoperable AI by enabling developers to easily leverage industry-leading GPU acceleration regardless of their choice of framework. Developers can now tap into the power of TensorRT through ONNX Runtime to accelerate inferencing of ONNX models, which can be exported or converted from PyTorch, TensorFlow, MXNet and many other popular frameworks. Today, ONNX Runtime powers core scenarios that serve billions of users in Bing, Office, and more.

With the TensorRT execution provider, ONNX Runtime delivers better inferencing performance on the same hardware compared to generic GPU acceleration. We have seen up to 2X improved performance using the TensorRT execution provider on internal workloads from Bing MultiMedia services.
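In ONNX Runtime, you pick execution providers per session by passing an ordered `providers` list to `InferenceSession`. `onnxruntime.get_available_providers()` reports which providers are available, and whether TensorRT appears depends on how onnxruntime was built or installed. Below is a minimal Python sketch that keeps the preferred providers that are actually available, in priority order. The priority list is my own choice, and the model path is hypothetical.

```python
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",  # TensorRT, if this onnxruntime build includes it
    "CUDAExecutionProvider",      # generic CUDA acceleration
    "CPUExecutionProvider",       # fallback that is always present
]

def choose_providers(available):
    """Keep the preferred providers that are available, in priority order."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    return chosen or ["CPUExecutionProvider"]

if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed")
    else:
        providers = choose_providers(ort.get_available_providers())
        print("would use:", providers)
        # session = ort.InferenceSession("model.onnx", providers=providers)  # hypothetical path
```

Listing CUDA and CPU after TensorRT lets ONNX Runtime fall back to them for work the earlier providers do not take.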

To learn more, check out our in-depth blog on the ONNX Runtime and TensorRT integration or attend the ONNX session at NVIDIA GTC.

Accelerating machine learning for all

Our collaboration with NVIDIA marks another milestone in our venture to help developers and data scientists deliver innovation faster. We are committed to accelerating the productivity of all machine learning practitioners regardless of their choice of framework, tool, and application. We hope these new integrations make it easier to drive AI innovation and strongly encourage the community to try it out. Looking forward to your feedback!